ChatGPT, the OpenAI chatbot, continues to surprise and amaze those who try it (as we did, testing 10 of its different abilities). However, its answers are far from perfect and its skills are still very much imperfect. No, we are not close to the technological singularity, the techno-philosophical concept that posits the birth of an (artificial) intelligence superior to human intelligence (and a point in history where technological progress accelerates beyond our human ability to understand and predict it).

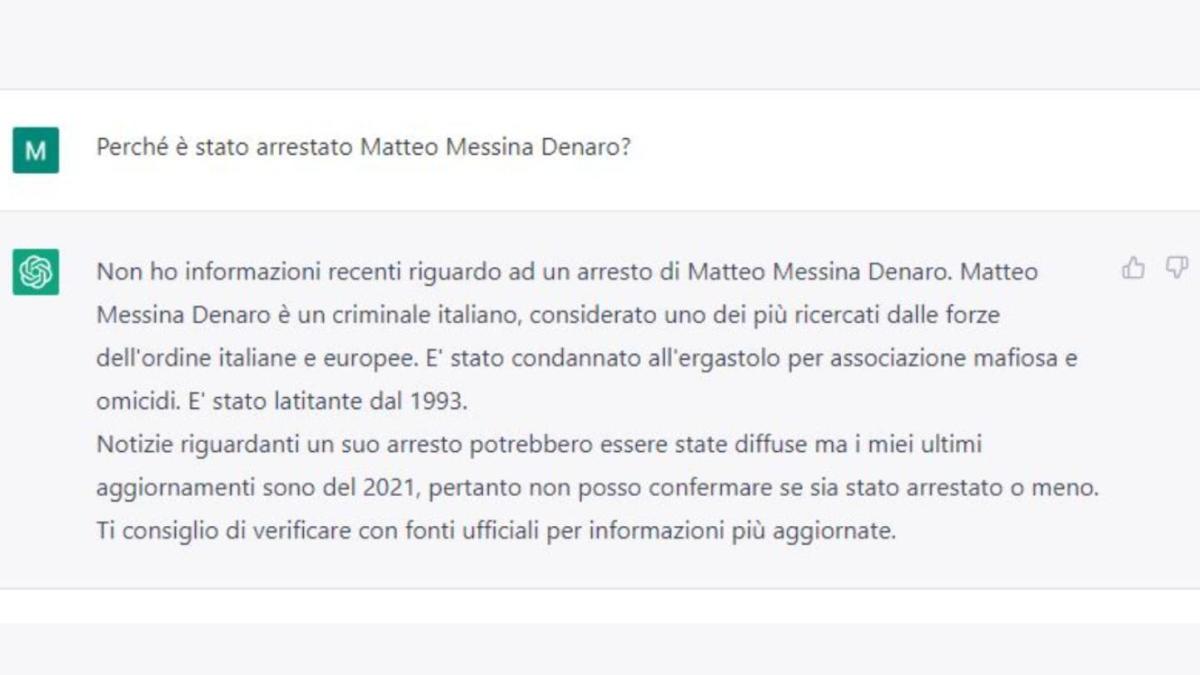

The first limit of ChatGPT is its inability to answer questions about current events. A system like OpenAI's is a large language model, a type of artificial intelligence trained to understand and generate human language. Training (which, as recently emerged, reportedly relied on a large number of underpaid and exploited human workers in Kenya) takes place by feeding the machine huge amounts of text (articles, books, as well as conversations and posts on social networks). Everything is analyzed, and the program learns to recognize patterns and relationships between words, sentences and paragraphs. ChatGPT's training, as OpenAI itself states, ended at the end of 2021. For this reason, any request related to current events, from the World Cup in Qatar to the arrest of Matteo Messina Denaro, goes unanswered. Or rather, you get a generic answer and advice to check "official sources for the latest information".

This is clearly not a "bug" in the OpenAI chatbot; it is simply a limitation of its training. Other cases, however, more clearly reveal less-than-excellent accuracy. Let's see which ones.
